Improving fine motor skills of neural prostheses

Carrying shopping bags, threading a needle – power and precision grips are part of our everyday lives. We only realize how important our hands are when we can no longer use them, for example due to paralysis after a spinal cord injury or diseases such as ALS, which cause progressive muscle paralysis.

To help patients, scientists have been researching neuroprostheses for decades. These artificial hands, arms or legs could give people with disabilities their mobility back. Damaged nerve connections are bridged by brain-computer interfaces that decode the signals from the brain and translate them into movements, which in turn control the prosthesis. Until now, however, hand prostheses in particular have lacked the fine motor skills needed for everyday use.

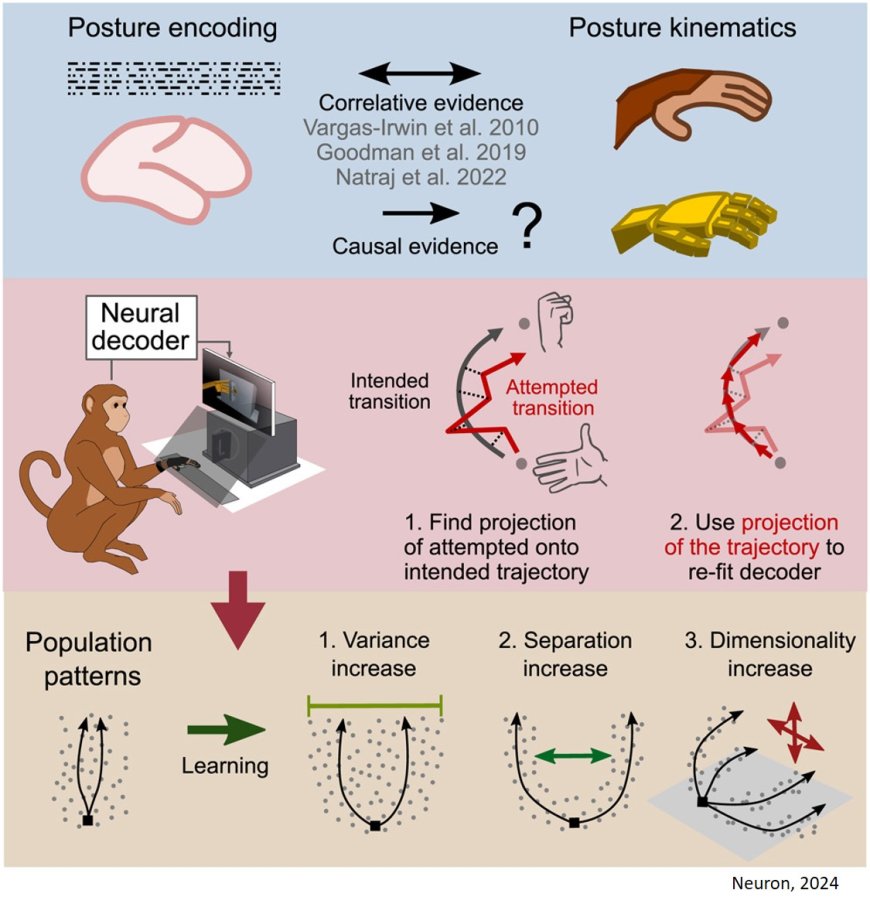

“How well a prosthesis works depends primarily on the neural data read by the computer interface that controls it,” says the first author of the study. “Previous studies on arm and hand movements have focused on the signals that control the velocity of a grasping movement. We wanted to find out whether neural signals representing hand postures might be better suited to control neuroprostheses.”

For the study, the researchers worked with rhesus monkeys (Macaca mulatta). Like humans, they have a highly developed nervous and visual system as well as pronounced fine motor skills. This makes them particularly suitable for researching grasping movements.

To prepare for the main experiment, the scientists trained two rhesus monkeys to move a virtual avatar hand on a screen. During this training phase, the monkeys performed the hand movements with their own hand while simultaneously seeing the corresponding movement of the virtual hand on the screen. A data glove with magnetic sensors, which the monkeys wore during the task, recorded the animals' hand movements.

Once the monkeys had learned the task, they were trained in a next step to control the virtual hand by “imagining” the grip. Meanwhile, the researchers measured the activity of populations of neurons in the cortical brain areas that are specifically responsible for controlling hand movements. They focused on the signals that represent the different hand and finger postures and adapted the algorithm of the brain-computer interface, which translates the neural data into movement, as part of a correspondingly adapted protocol.
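To illustrate what "translating neural data into movement" can mean, here is a minimal sketch of a posture decoder. It is not the algorithm used in the study, which the article does not specify. It assumes a simple linear relationship between firing rates and joint angles, uses synthetic, noiseless data, and all names (`fit_linear_decoder`, `decode`, the array sizes) are hypothetical.

```python
import numpy as np

# Synthetic stand-in data: these numbers are illustrative assumptions,
# not recordings or parameters from the study.
rng = np.random.default_rng(0)
n_samples, n_neurons, n_joints = 200, 12, 3

# Hypothetical "true" mapping from firing rates to joint angles.
true_weights = rng.normal(size=(n_neurons, n_joints))
rates = rng.poisson(lam=5.0, size=(n_samples, n_neurons)).astype(float)
posture = rates @ true_weights  # noiseless, so a linear fit can recover it


def fit_linear_decoder(rates, posture):
    """Least-squares fit of weights that map firing rates to hand posture."""
    weights, *_ = np.linalg.lstsq(rates, posture, rcond=None)
    return weights


def decode(rates, weights):
    """Predict a posture (joint angles) directly from firing rates."""
    return rates @ weights


# Fit on the first 150 samples, then decode the remaining 50.
weights = fit_linear_decoder(rates[:150], posture[:150])
predicted = decode(rates[150:], weights)
max_abs_error = float(np.max(np.abs(predicted - posture[150:])))
print(f"largest decoding error on held-out samples: {max_abs_error:.2e}")
```

The point of the sketch is the output variable: the decoder predicts the posture itself at each time step, rather than a velocity that would have to be integrated over time. Real neural data are noisy and would need a more robust decoder.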

“Deviating from the classic protocol, we adapted the algorithm so that not only the destination of a movement is important, but also how you get there, i.e., the path of execution,” explains the author. “This ultimately led to the most accurate results.”
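The difference between judging only the destination and judging the whole path can be made concrete with a small sketch. The two error measures and the example trajectories below are illustrative assumptions, not the measures used in the study.

```python
import math


def endpoint_error(decoded, target):
    """Distance between the final postures only (the destination)."""
    return math.dist(decoded[-1], target[-1])


def path_error(decoded, target):
    """Mean distance between postures at every time step (the path)."""
    if len(decoded) != len(target):
        raise ValueError("trajectories must have the same number of time steps")
    return sum(math.dist(d, t) for d, t in zip(decoded, target)) / len(target)


# Hypothetical trajectories of two joint angles (in degrees) over three steps.
target = [(0.0, 0.0), (10.0, 10.0), (20.0, 20.0)]
detour = [(0.0, 0.0), (10.0, 0.0), (20.0, 20.0)]

print(endpoint_error(detour, target))  # same destination, so no endpoint error
print(path_error(detour, target))      # the detour at step two is penalized
```

Both trajectories end in the same posture, so an endpoint-only measure treats them as equally good. A path-aware measure also penalizes the deviation along the way.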

The researchers then compared the movements of the avatar hand with the previously recorded data from the real hand and were able to show that the avatar movements were executed with comparable precision.

“In our study, we were able to show that the signals that control the posture of a hand are particularly important for controlling a neuroprosthesis,” says the senior author of the study. “These results can now be used to improve the functionality of future brain-computer interfaces and thus also to improve the fine motor skills of neural prostheses.”