An open AI model to interpret chest X-rays

Can artificial intelligence, or AI, transform health care for the better?

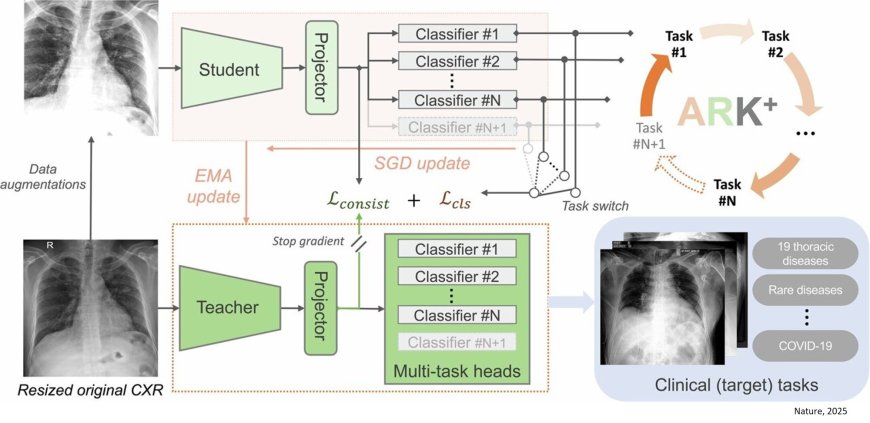

Now, rising to the challenge, researchers have built a powerful new AI tool, called Ark+, to help doctors read chest X-rays more accurately and improve health care outcomes.

“Ark+ is designed to be an open, reliable and ultimately useful tool in real-world health care systems,” said the lead author of the study recently published in the journal Nature.

In a proof-of-concept study, the new AI tool performed exceptionally well at diagnosis, from common lung diseases to rare and even emerging ones like COVID-19 or avian flu. It was also more accurate than proprietary software released by industry titans like Google and Microsoft.

“Our goal was to build a tool that not only performed well in our study but also can help democratize the technology to get it into the hands of potentially everyone,” said the author. “Ultimately, we want AI to help doctors save lives.”

Patients want healthier lives and better outcomes, and doctors want to get the diagnosis right the first time for better patient care.

The research team wanted to use AI to help interpret the most common type of X-ray used in medicine, the chest X-ray.

Chest X-rays help doctors quickly diagnose a range of conditions affecting the chest, including lung problems (like pneumonia, tuberculosis or Valley fever), heart issues, broken ribs and even certain gut conditions.

But they can sometimes be hard to interpret, even for experienced physicians, who may miss rare conditions or emerging diseases, as happened in the first year of the COVID-19 pandemic.

The Ark+ tool makes chest X-rays easier to read by reducing mistakes and speeding up diagnosis. It also makes the technology more equitable by offering top-quality AI health tools with free, open access worldwide.

“We believe in open science,” said the author. “So, we used public data and a global data set as we think this will more quickly develop the AI model.”

AI works by training computer software on large data sets. In the case of Ark+, that meant more than 700,000 images from several publicly available X-ray data sets from around the world.

The key difference-maker for Ark+ was drawing on the expertise of the human art of medicine. Crucially, the team included all the detailed doctors’ notes compiled for every image. “You learn more knowledge from experts,” said the author.

These expert physician notes were critical to how Ark+ learned, helping it become more and more accurate as it was trained on each successive data set.

“Ark+ is accruing and reusing knowledge,” said the author, explaining the acronym (Accruing and Reusing Knowledge). “That's how we train it. And pretty much, we were thinking of a new way to train AI models with numerous datasets via fully supervised learning.”

“Because before this, if you wanted to train a large model using multiple data sets, people usually used self-supervised learning, or you train it on the disease model, the abnormal, versus a normal X-ray.”

Large companies like Google and Microsoft have been developing AI health care models this way.

“That means you are not using the expert labels,” said the author. “And so, that means you throw out the most valuable information from the data sets, these expert labels. We wanted AI to learn from expert knowledge, not only from the raw data.”
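The article does not spell out the Ark+ architecture. As a rough, hypothetical illustration of what training one model on several data sets with fully supervised learning can look like, the Python sketch below shares one small feature layer across two made-up data sets whose expert labels cover different findings. Each finding gets its own output head, and training cycles through the data sets so the shared layer keeps accruing knowledge from each. The data sets, finding names, layer sizes and per-finding heads are all assumptions for illustration, not the study's actual method.

```python
import math
import random

HIDDEN = 4  # size of the shared feature layer (arbitrary toy choice)

# Hypothetical "expert" rules, used only to generate toy labels.
RULES = {
    "pneumonia": lambda x: x[0] > 0,
    "effusion": lambda x: x[1] > 0,
    "nodule": lambda x: x[0] + x[1] > 0,
}
# Hypothetical data sets: each one annotates a different set of findings.
LABEL_SETS = {"dataset_a": ["pneumonia"], "dataset_b": ["effusion", "nodule"]}


def make_toy_datasets(n=40, seed=0):
    """Build small labeled data sets; each sample is (features, {finding: 0.0/1.0})."""
    rng = random.Random(seed)
    datasets = {}
    for name, labels in LABEL_SETS.items():
        samples = []
        for _ in range(n):
            x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]  # stand-in for image features
            samples.append((x, {lab: float(RULES[lab](x)) for lab in labels}))
        datasets[name] = samples
    return datasets


def shared_features(shared, x):
    """Shared layer reused by every data set: tanh(W x)."""
    return [math.tanh(w[0] * x[0] + w[1] * x[1]) for w in shared]


def predict(head, h):
    """Per-finding head: sigmoid(v . h + b), with the bias stored last."""
    z = sum(v * hk for v, hk in zip(head[:HIDDEN], h)) + head[HIDDEN]
    return 1.0 / (1.0 + math.exp(-z))


def mean_loss(shared, heads, datasets):
    """Average binary cross-entropy over every expert label in every data set."""
    total, count = 0.0, 0
    for samples in datasets.values():
        for x, labels in samples:
            h = shared_features(shared, x)
            for lab, y in labels.items():
                p = min(max(predict(heads[lab], h), 1e-7), 1 - 1e-7)
                total -= y * math.log(p) + (1 - y) * math.log(1 - p)
                count += 1
    return total / count


def train(epochs=30, lr=0.1, seed=1):
    """Plain SGD; returns (loss before training, loss after training)."""
    datasets = make_toy_datasets()
    rng = random.Random(seed)
    shared = [[rng.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(HIDDEN)]
    heads = {
        lab: [rng.uniform(-0.5, 0.5) for _ in range(HIDDEN)] + [0.0]
        for labels in LABEL_SETS.values()
        for lab in labels
    }
    before = mean_loss(shared, heads, datasets)
    for _ in range(epochs):
        # Visit the data sets in turn so the shared layer learns from each one.
        for samples in datasets.values():
            for x, labels in samples:
                h = shared_features(shared, x)
                grad_h = [0.0] * HIDDEN
                # Fully supervised: only the labels this data set's experts provided add loss.
                for lab, y in labels.items():
                    head = heads[lab]
                    err = predict(head, h) - y  # gradient of BCE with respect to the logit
                    for k in range(HIDDEN):
                        grad_h[k] += err * head[k]  # read the weight before updating it
                        head[k] -= lr * err * h[k]
                    head[HIDDEN] -= lr * err
                # Backpropagate into the shared layer (derivative of tanh is 1 - tanh^2).
                for k in range(HIDDEN):
                    local = grad_h[k] * (1 - h[k] ** 2)
                    shared[k][0] -= lr * local * x[0]
                    shared[k][1] -= lr * local * x[1]
    return before, mean_loss(shared, heads, datasets)


if __name__ == "__main__":
    before, after = train()
    print(f"mean loss before: {before:.3f}, after: {after:.3f}")
```

Because each data set contributes loss only for the findings its experts labeled, no expert label is thrown away, and no data set needs to share the others' label vocabulary. A self-supervised approach, by contrast, would train the shared layer without these labels at all. A real system would swap the toy features for an image encoder and the hand-written gradients for a deep-learning framework.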

The new tool may be the slingshot medicine needs, as it was shown to outperform proprietary software developed by industry giants.