AI Agents in Virtual Lab Design New SARS-CoV-2 Nanobodies

Imagine you’re a molecular biologist wanting to launch a project seeking treatments for a newly emerging disease. You know you need the expertise of a virologist and an immunologist, plus a bioinformatics specialist to help analyze and generate insights from your data. But you lack the resources or connections to build a big multidisciplinary team.

Researchers now offer a novel solution to this dilemma: an AI-driven Virtual Lab in which a team of AI agents, each equipped with different scientific expertise, can tackle sophisticated, open-ended scientific problems by formulating, refining, and carrying out a complex research strategy. These agents can even conduct virtual experiments, producing results that can be validated in real-life laboratories.

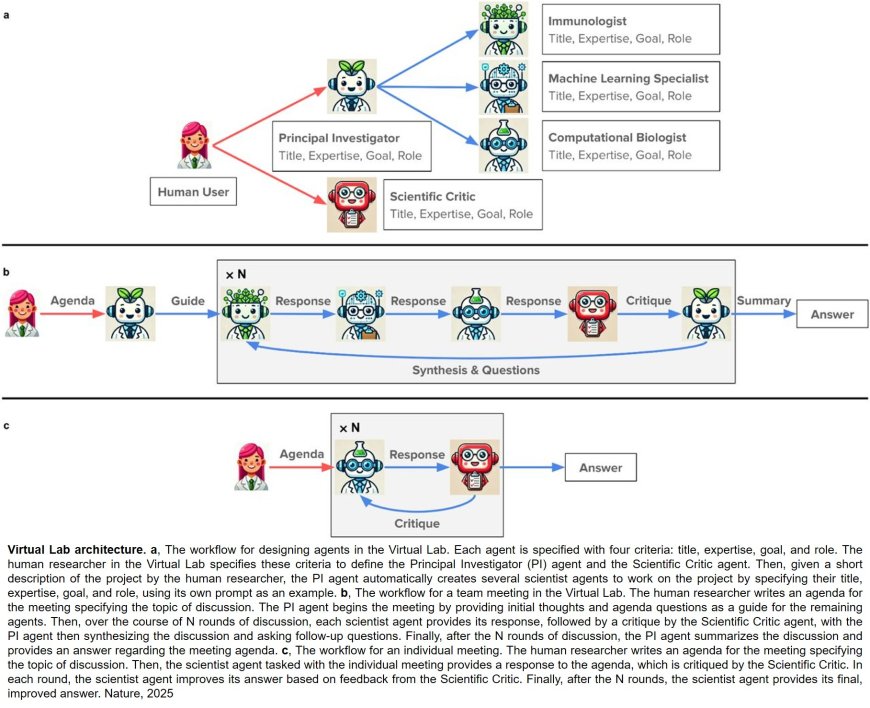

In a study published in Nature, the researchers describe their Virtual Lab platform, in which a human user creates a “Principal Investigator” AI agent (the PI) that assembles and directs a team of additional AI agents emulating the specialized research roles seen in science labs. The human researcher proposes a scientific question and then monitors meetings in which the PI agent exchanges ideas with the team of specialist agents to advance the research. The agents are run by a large language model (LLM), giving them scientific reasoning and decision-making capabilities.

The authors then used the Virtual Lab platform to investigate a timely research question: designing antibodies or Nanobodies to bind to the spike protein of new variants of the SARS-CoV-2 virus. After just a few days working together, the Virtual Lab team had designed and implemented an innovative computational pipeline, and had presented the authors with blueprints for dozens of binders, two of which showed particular promise against new SARS-CoV-2 strains when subsequently tested in the lab.

“What was once this crazy science fiction idea is now a reality,” said an author. “The AI agents came up with a pipeline that was quite creative. But at the same time, it wasn’t outrageous or nonsensical. It was very reasonable – and they were very fast.”

It’s become increasingly common for human scientists to employ LLMs to help with scientific research, such as analyzing data, writing code, and even designing proteins. The authors’ Virtual Lab platform, however, is to their knowledge the first to apply multistep reasoning and interdisciplinary expertise to successfully address an open-ended research question.

In addition to the PI agent and specialist agents, their Virtual Lab platform includes a Scientific Critic agent, a generalist whose role is to ask probing questions and inject a dose of skepticism into the process. “We found the Critic to be quite essential, and [it] also reduced hallucinations,” the author said.
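To make the meeting structure described above more concrete, here is a minimal Python sketch of one team meeting: a human sets the agenda, the PI, the specialists, and the Critic take turns over a few rounds, and the PI closes with a summary. This is an illustration only, not the authors' implementation. The `Agent` class, the `run_meeting` function, the specialist titles, and the `echo_llm` stand-in for a real LLM call are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical LLM interface: (role prompt, transcript so far) -> reply text.
LLM = Callable[[str, str], str]
Turn = Tuple[str, str]  # (speaker, text)


@dataclass
class Agent:
    title: str
    expertise: str

    def speak(self, llm: LLM, transcript: str) -> str:
        # Each agent is the same LLM, steered by a role-specific prompt.
        prompt = f"You are the {self.title}. Expertise: {self.expertise}."
        return llm(prompt, transcript)


def render(turns: List[Turn]) -> str:
    """Flatten the meeting so far into plain text that every agent can read."""
    return "\n".join(f"{speaker}: {text}" for speaker, text in turns)


def run_meeting(llm: LLM, pi: Agent, specialists: List[Agent],
                critic: Agent, agenda: str, rounds: int = 2) -> List[Turn]:
    """Run one meeting and return the full, human-readable transcript."""
    turns: List[Turn] = [("Human", agenda)]
    for _ in range(rounds):
        # Assumed speaking order: PI first, then specialists, Critic last.
        for agent in [pi, *specialists, critic]:
            turns.append((agent.title, agent.speak(llm, render(turns))))
    # The PI closes the meeting with a final summary turn.
    turns.append((pi.title, pi.speak(llm, render(turns))))
    return turns


def echo_llm(prompt: str, transcript: str) -> str:
    # Placeholder for a real model call; it only reports what it was given.
    role = prompt.split(".")[0]
    return f"[{role}] responding to {len(transcript.splitlines())} prior turns"


if __name__ == "__main__":
    pi = Agent("Principal Investigator", "research strategy")
    team = [Agent("Immunologist", "antibody design"),
            Agent("Computational Biologist", "protein modeling")]
    critic = Agent("Scientific Critic", "spotting errors and weak reasoning")
    for speaker, text in run_meeting(echo_llm, pi, team, critic,
                                     "Design binders for new spike variants"):
        print(f"{speaker}: {text}")
```

Swapping `echo_llm` for a function that calls an actual model would turn the sketch into a working loop. Because every turn is appended to a plain list of speaker and text pairs, the whole discussion remains available for a human to review afterward.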

While human researchers participated in the AI agents’ meetings and offered guidance at key moments, their words made up only about 1% of all conversations. The vast majority of discussions, decisions, and analyses were performed by the AI agents themselves.

In this study, the Virtual Lab team came up with 92 new “Nanobodies” (tiny proteins that work like antibodies), and lab experiments found that two of them bound to the spike protein of recent SARS-CoV-2 variants, a finding significant enough that Pak expects to publish studies on them.

“You’d think there’d be no way AI agents talking together could propose something akin to what a human scientist would come up with, but we found here that they really can,” said the author. “It’s pretty shocking.”

“This project opened the door for our Protein Science team to test a lot more well-conceived ideas very quickly,” the author said. “The Virtual Lab gave us more work, in a sense, because it gave us more ideas to test. If AI can produce more testable hypotheses, that’s more work for everyone.”

The results not only demonstrate the potential benefits of human–AI collaborations but also highlight the importance of diverse perspectives in science. Even in these virtual settings, instructing agents to assume different roles and bring varying perspectives to the table resulted in better outcomes than one AI agent working alone, the authors said. And because the discussions result in a transcript that human team members can access and review, researchers can feel confident about why certain decisions were made and probe further if they have questions or concerns.

“The agents and the humans are all speaking the same language, so there’s nothing ‘black box’ about it, and the collaboration can progress very smoothly,” the author said. “It was a really positive experience overall, and I feel pretty confident about applying the Virtual Lab in future research.”