How brains convert sounds to actions

You hear a phone ring or a dog bark. Is it yours or someone else’s? You hear footsteps in the night: is it your child or an intruder? Friend or foe? The decision you make will determine what action you take next. Researchers have now shed light on what might be going on in our brains during moments like these, bringing us a step closer to unravelling the mystery of how the brain translates perceptions into actions.

Every day, we make countless decisions based on sounds without a second thought. But what exactly happens in the brain at such moments? A new study published in Current Biology takes a look under the hood. Its findings deepen our understanding of how sensory information and behavioural choices are intertwined within the cortex, the brain’s outer layer that shapes our conscious perception of the world.

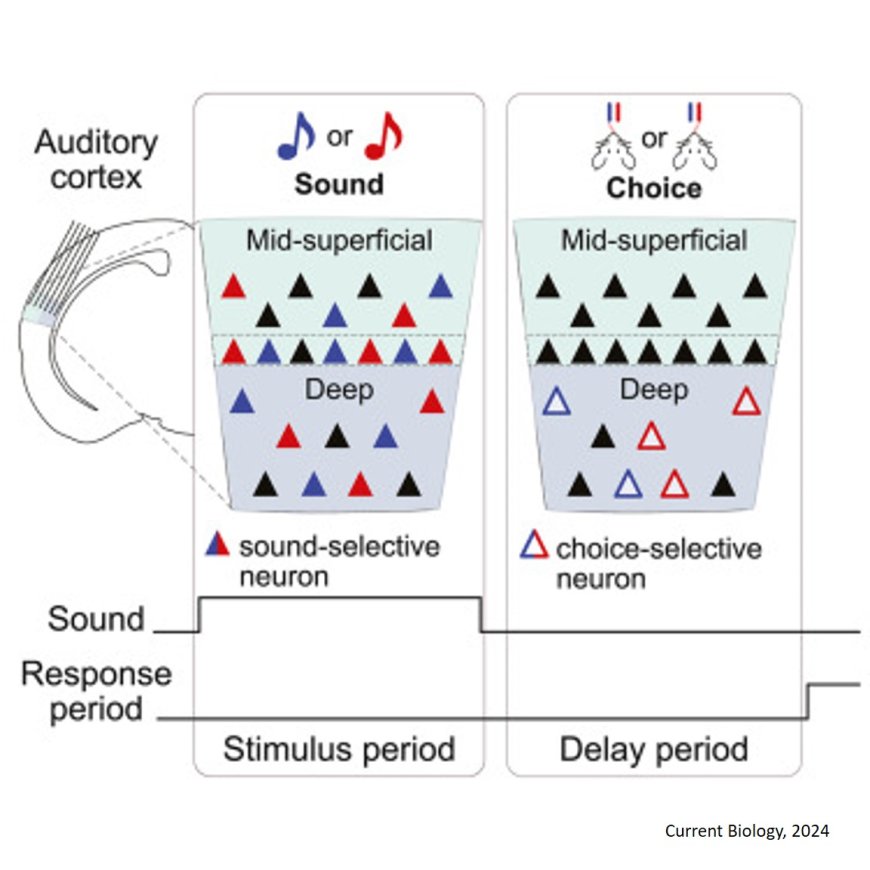

The cortex is divided into regions that handle different functions: sensory areas process information from our environment, while motor areas manage our actions. Surprisingly, signals related to future actions, which one might expect to find only in motor areas, also appear in sensory ones. What are movement-related signals doing in regions dedicated to sensory processing? When and where do these signals emerge? Exploring these questions could clarify the origin and role of these perplexing signals, and how they do — or don’t — drive decisions.

The researchers tackled these questions by devising a task for mice. The study’s lead author picks up the story: “To unravel what signals related to future actions might be doing in sensory areas, we thought carefully about the task mice would have to perform. Previous studies often relied on ‘Go-NoGo’ tasks, where animals report their choice either by making an action or by not moving, depending on the identity of the stimulus. This setup, however, mixes up signals linked to specific movements with those related to moving in general. To isolate signals for specific actions, we trained mice to choose between two actions. They had to decide whether a sound was higher or lower than a set threshold and report their decision by licking one of two spouts, left or right”.

However, this wasn’t sufficient. “Mice quickly learn this task, often responding as soon as they hear the sound”, the author continues. “To separate brain activity related to the sound from that related to the response, we introduced a half-second delay during which the mice had to withhold their response. Crucially, this delay allowed us to separate in time the brain activity linked to the stimulus from that linked to the choice, and to track how movement-related neural signals unfolded over time following the initial sensory input”.

“To dissect neural representations of stimulus and choice, it was also important to design an experiment challenging enough for the mice to make mistakes. A 100% success rate would blur the distinction between stimulus and choice, as each stimulus would always elicit the same response. By creating the potential for errors, we could tease apart the neural encoding of the sound from the decisions made”. For instance, when the mice heard the same tone but licked different spouts (correctly on some trials, incorrectly on others), the researchers could examine whether a neuron’s activity differed between the two actions. If it did, the neuron carried information about the choice rather than only about the sound.
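To make the logic of error trials concrete, here is a minimal Python sketch on simulated data. It is a hypothetical illustration, not the study’s analysis: the two model neurons, the 80% accuracy, the firing rates and the function names (`simulate_trials`, `choice_effect`, `sound_neuron`, `choice_neuron`) are all assumptions. Holding the tone fixed and comparing trials on which the mouse licked different spouts isolates choice-related activity: a purely sound-driven neuron shows no difference, whereas a choice-driven neuron does. With perfect performance, one of the two groups is empty and the comparison cannot be made at all.

```python
import random
from statistics import mean

# Hypothetical task rule (assumption): high tones -> right spout, low tones -> left spout.
CORRECT_SPOUT = {"high": "right", "low": "left"}


def simulate_trials(n_per_stimulus, accuracy):
    """Return (stimulus, choice) pairs with a fixed fraction of error trials."""
    trials = []
    for stimulus, correct in CORRECT_SPOUT.items():
        wrong = "left" if correct == "right" else "right"
        n_correct = round(n_per_stimulus * accuracy)
        trials += [(stimulus, correct)] * n_correct
        trials += [(stimulus, wrong)] * (n_per_stimulus - n_correct)
    return trials


def sound_neuron(stimulus, choice, rng):
    # Hypothetical neuron: fires more for high tones, whatever spout is chosen.
    return 10.0 + (4.0 if stimulus == "high" else 0.0) + rng.gauss(0, 1)


def choice_neuron(stimulus, choice, rng):
    # Hypothetical neuron: fires more before right licks, whatever tone was played.
    return 10.0 + (4.0 if choice == "right" else 0.0) + rng.gauss(0, 1)


def choice_effect(neuron, trials, rng):
    """Mean firing difference (right minus left) computed within each stimulus.

    Returns None for a stimulus if all its trials share one choice, i.e. when
    there are no error trials and stimulus and choice are fully confounded.
    """
    effects = {}
    for stimulus in CORRECT_SPOUT:
        right = [neuron(s, c, rng) for s, c in trials if s == stimulus and c == "right"]
        left = [neuron(s, c, rng) for s, c in trials if s == stimulus and c == "left"]
        effects[stimulus] = mean(right) - mean(left) if right and left else None
    return effects


rng = random.Random(0)
trials = simulate_trials(200, accuracy=0.8)
print(choice_effect(sound_neuron, trials, rng))   # both values close to 0
print(choice_effect(choice_neuron, trials, rng))  # both values close to 4
perfect = simulate_trials(200, accuracy=1.0)
print(choice_effect(choice_neuron, perfect, rng))  # {'high': None, 'low': None}
```

The within-stimulus comparison is the key design choice: averaging over all trials would let the choice neuron look tone-tuned simply because correct choices track the tone.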

After six months of rigorous training, the researchers could finally begin recording neural activity in mice as they performed the task. They focused on the auditory cortex, the part of the cortex responsible for processing what we hear, which they had already shown was required for the task.

“The cortex of mice and humans is composed of six layers, each with specialised functions and distinct connections to other brain regions”, explains the principal investigator and the study’s senior author. “Given that certain layers typically receive sensory information from other brain regions, while others send signals to motor centres, we simultaneously recorded activity across the layers of the auditory cortex, for the first time in a task like ours, in which sensory and motor signals could be cleanly separated”.

“We found that sensory- and choice-related signals displayed distinct spatial and temporal patterns”, the senior author continues. “Signals related to sound detection appeared quickly but faded fast, vanishing around 400 milliseconds after the sound was presented, and were distributed broadly across all cortical layers. In contrast, choice-related signals, which indicate the movement the mouse is about to make, emerged later, before the decision was executed, and were concentrated in the cortex’s deeper layers”.

However, despite the temporal separation between stimulus and choice activity, further analysis revealed an intriguing connection: neurons that responded to a specific sound frequency also tended to be more active for the actions associated with tho